Self-driving technology is a holy grail that promises to forever change the way we interact with cars. Thus far, there’s been plenty of hype and excitement, but fully autonomous vehicles that remove the driver from the equation have remained far off. Tesla have long positioned themselves as a market leader in this area, with their Autopilot technology allowing some limited autonomy on select highways. However, in a recent announcement, they have heralded the arrival of a new “Full Self Driving” ability for select beta testers in their early access program.

Taking Things Up A Notch

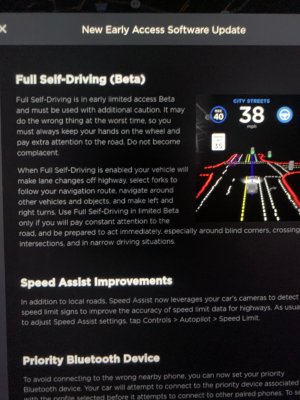

The new software update further extends the capabilities of Tesla vehicles to drive semi-autonomously. Despite the boastful “Full Self Driving” moniker, or FSD for short, it’s still classified as a Level 2 driving automation system, which relies on human intervention as a backup. This means that the driver must be paying attention and ready to take over in an instant, at all times. Users are instructed to keep their hands on the wheel, but predictably, videos have already surfaced of users ignoring this instruction.

The major difference between FSD and the previous Autopilot software is the ability to navigate city streets. Formerly, Tesla vehicles were only able to self-drive on highways, where the more regular flow of traffic is easier to handle. City streets introduce far greater complexity, with hazards like parked cars, pedestrians, bicycles, and complicated intersections. Unlike others in the field, who are investing heavily in LIDAR technology, Tesla’s system relies entirely on cameras and radar to navigate the world around it.

A Very Public Beta Test

Regulations are in place in many jurisdictions to manage the risks of testing autonomous vehicles. Particularly after a pedestrian was killed by an Uber autonomous car in 2018, many have been wary of the risks of letting this technology loose on public roads.

Tesla appear capable of shortcutting these requirements by simply stating that the driver is responsible for the vehicle and must remain alert at all times. The problem is that this approach ignores the effect that autonomous driving has on a human driver. Traditional aids like cruise control still require the driver to steer, ensuring their attention is fully trained on the driving task. However, when the vehicle takes over all driving duties, the human in the loop is left with the sole role of staying vigilant for danger. Trying to concentrate continuously on such a task, while not actually being required to do anything most of the time, is acutely difficult for most people. Ford’s own engineers routinely fell asleep during testing of the company’s autonomous vehicles. It goes to show that any system that expects a human to be constantly ready to take over doesn’t work unless it keeps them involved.

Cruise and Waymo Going Driverless at the Same Time

Tesla’s decision to open the beta test to the public has proved controversial. Allowing the public to use the technology puts not just Tesla owners, but other road users at risk too. The NHTSA have delivered a stern warning, stating it “will not hesitate to take action to protect the public against unreasonable risks to safety.” Of course, Tesla are not the only company forging ahead in the field of autonomous driving. GM’s Cruise will be trialing their robotic vehicles without human oversight before the year is out, and Alphabet’s Waymo has already been running an entirely driverless rideshare service for some time, with riders held to a strict non-disclosure agreement.

The difference in these cases is that neither Cruise nor Waymo is relying on a human to remain continually watchful for danger. Their systems have been developed to a point where regulators are comfortable allowing the companies to run the vehicles without direct intervention. This contrast can be perceived in two ways, each of which has some validity. Tesla’s technology could be seen as taking the easy way out, holding a human responsible to make up for shortcomings in the autonomous system and protect the company from litigation. Given their recent updates to their in-car camera firmware, this seems plausible. Alternatively, Tesla’s approach could be seen as the more cautious choice, keeping a human in the loop in the event something does go wrong. However, given that there is already plenty of evidence that this doesn’t work well in practice, one would need to be charitable to hold the latter opinion.

A conservative view suggests that as the technology rolls out, we’ll see more egregious slip-ups that a human driver wouldn’t have made, just as with previous iterations of Tesla’s self-driving technology. Where things get hairy is determining whether, despite those slip-ups, the system delivers a better safety record than leaving humans behind the wheel. Thus far, even multiple fatalities haven’t slowed the automaker’s push, so expect to see development continue on public roads near you.
